The Spielmobile is a robot that can drive around wirelessly while transmitting a camera feed and will soon be capable of path planning, localization, and mapping thanks to an onboard Lidar unit. We also plan to experiment with machine vision down the road. The robot is controlled by an ARM microprocessor and a Raspberry Pi and uses two gearmotors with quadrature encoders offering 8400 counts per revolution for precise maneuvering.

I worked on this project with two other Mechatronics Engineering students (Will Clark and Tom Meredith). I took care of all the mechanical aspects of the project, including the design, sourcing, machining, and assembly/maintenance. I used SolidWorks extensively to generate models and assemblies of the various components of the design and gained considerable experience working with configurations and subassemblies (note that the screws appear in the rendering below but are left out of the model above to reduce loading time). Through each iteration of the design process I consulted with the student machine shop technicians on what could be machined with the equipment available and modified the design accordingly. I should mention that I obtained CAD models of a few components straight from the manufacturers to increase model accuracy, so I cannot claim all the work as my own. I ordered all the off-the-shelf components, purchased the required aluminum on campus, and machined all the parts myself. I spent a significant amount of time in the student machine shop using lathes, mills, and various saws and grinders to create what is shown below.

As soon as I had the chassis assembled, we had to try it out. This is just a brief clip of it on laminate floor and carpet. At this stage none of the electronics were onboard, and we just attached some 9V batteries. The motors are rated for 12V and we use lithium polymer batteries, so the robot’s top speed is actually a little faster than what’s shown below.

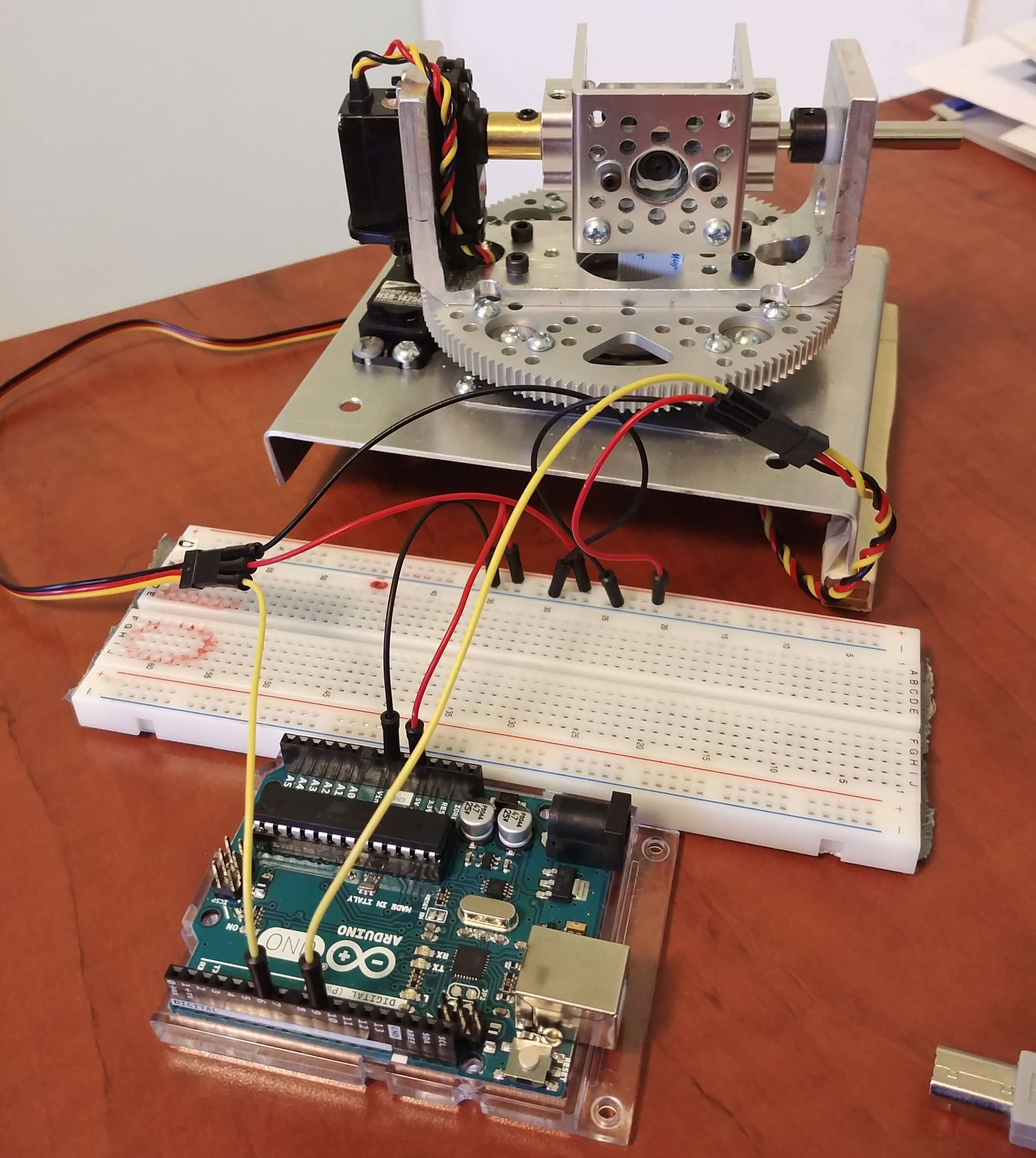

Once the mechanical work was done, I began tinkering with the camera gimbal. The yaw of the camera is controlled by a continuous rotation servo, which rotates the large gear fixed to a turntable bearing. The pitch of the camera is controlled by a standard digital servo with high holding torque and fairly high resolution to keep the camera steady while the robot drives around. I wrote a simple Arduino program to take commands through a serial interface, drive the two servo motors (provided the commands would not exceed the servo limits), and report the current camera angles and speeds back to the connected Raspberry Pi.

Then I learned enough Node.js to turn my Raspberry Pi into a web server and connect to serial devices. I created a website that featured the camera feed in the center of the screen and displayed the camera’s current position and speed for both axes, as well as the current command (‘up’, ‘down-left’, ‘idle’, etc.), along the bottom. I used WebSockets to receive commands from the user’s computer in real time and set up port forwarding on my router to make my Raspberry Pi globally accessible. To test my interface, while I was in Waterloo I had a friend in Ottawa go to the website and drive the camera around while watching the feed. Altogether it was an interesting sub-project, and I learned a lot about web servers while becoming a little more comfortable with web development in general.
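The command handling on the gimbal side can be sketched roughly as follows. This is plain C++ rather than the actual Arduino program, and it only models the logic: a direction command such as ‘up’ or ‘down-left’ nudges the pitch angle and sets the yaw direction, and the pitch is clamped so a command can never push the servo past its limits. The limit values, the step size, and the names `GimbalState` and `applyCommand` are all assumptions made for illustration.

```cpp
#include <algorithm>
#include <string>

// Hypothetical pitch limits and step size, in degrees; the real values
// depend on the servo and the gimbal geometry and are not given in the text.
constexpr int kPitchMinDeg = 0;
constexpr int kPitchMaxDeg = 180;
constexpr int kPitchStepDeg = 5;

struct GimbalState {
    int pitchDeg;  // commanded angle for the standard (pitch) servo
    int yawDir;    // continuous rotation (yaw) servo: -1 left, 0 stopped, +1 right
};

// Apply a command such as "up", "down-left", or "idle" to the current state.
// Any axis not mentioned in the command is left idle.
GimbalState applyCommand(GimbalState state, const std::string& command) {
    int pitchDelta = 0;
    state.yawDir = 0;

    if (command.find("up") != std::string::npos)    pitchDelta = kPitchStepDeg;
    if (command.find("down") != std::string::npos)  pitchDelta = -kPitchStepDeg;
    if (command.find("left") != std::string::npos)  state.yawDir = -1;
    if (command.find("right") != std::string::npos) state.yawDir = 1;

    // Clamp so the pitch servo is never driven beyond its limits.
    const int requested = state.pitchDeg + pitchDelta;
    state.pitchDeg = std::max(kPitchMinDeg, std::min(kPitchMaxDeg, requested));
    return state;
}
```

On the real hardware, the resulting state would be written to the two servos and echoed back over serial so the Raspberry Pi can display it. Clamping the angle instead of rejecting the whole command is a design choice made here for simplicity; the original program may handle out-of-range commands differently.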

Moving forward, Tom Meredith is working on the SLAM (simultaneous localization and mapping) algorithms that will use the Lidar to guide the robot autonomously, but my part in this project is complete.